Depth of field (DOF) defines what is sharp and what falls into blur in a photograph or 3D render. In photography it is shaped by aperture, focal length, and subject distance. In 3D, DOF is both an artistic and technical tool, balancing storytelling with performance, especially in VR and gaming. Mastering DOF across real cameras and digital tools lets you control viewer focus, create atmosphere, and add realism to your images.

Depth of field is the range of distances within an image that appears acceptably sharp. A shallow depth of field isolates a subject with strong foreground and background blur, while a deep depth of field keeps everything from near to far in focus. Whether you are shooting with a full-frame DSLR (digital single-lens reflex camera) or rendering inside Blender or Unreal Engine, DOF directs the eye, tells a story, and creates mood.

The sharpness of an image depends on how points of light are rendered on the camera sensor or film. When a point falls outside the precise plane of focus, it spreads into a blur disk. The largest blur disk still perceived as sharp is called the circle of confusion. This limit, combined with sensor size, lens focal length, and aperture, defines how much of your scene looks sharp. This old-but-gold video by thekinematicimage goes into more depth on the circle of confusion:

In photography, DOF is an optical phenomenon that arises from lens design, aperture shape, and how light passes through glass elements. In 3D graphics, DOF is a simulation, produced by algorithms such as ray-traced lens sampling or post-process CoC/bokeh techniques. Modern rendering can closely match real optical behavior, giving 3D artists nearly the same creative control over focus, blur, and bokeh as a photographer working with a Canon EF or Nikon F-mount lens.

Photographers adjust aperture, focal length, and focus distance. 3D artists mimic these with camera settings in software. But unlike physical cameras, 3D software lets you dial in hyperfocal distance with calculators, animate focus pulls, or even defy optics for stylized results, as we can see in this video by Pau Homs:

CPU-based renderers often use physically accurate ray-traced depth of field, sampling across the lens to compute the blur, which yields high-quality images at the cost of longer render times. GPU renderers can deliver much faster performance through massive parallelism: real-time engines rely on post-processing blur or bokeh approximations for speed, while GPU-enabled path tracers such as Blender’s Cycles with OptiX can produce high-fidelity DOF via ray tracing. The choice often comes down to balancing render speed against the desired realism.
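
To make "sampling across the lens" concrete, here is a minimal sketch of how a ray tracer might generate one depth-of-field ray under a thin-lens model. It is an illustration under stated assumptions, not the code of any particular renderer: the function name `sample_thin_lens_ray`, the camera-space convention (camera at the origin looking down +z, units in millimetres), and the parameter names are all hypothetical.

```python
import math
import random

def sample_thin_lens_ray(pixel_dir, focal_length_mm, f_number,
                         focus_distance_mm, rng=random):
    """Return (origin, direction) for one thin-lens camera ray.

    pixel_dir is the direction a pinhole camera would shoot through the
    pixel, in camera space with +z pointing forward (an assumed convention).
    """
    dx, dy, dz = pixel_dir

    # Point where the pinhole ray meets the plane of focus.
    t = focus_distance_mm / dz
    focus_point = (dx * t, dy * t, focus_distance_mm)

    # Uniform sample on the lens disk; sqrt keeps samples uniform in area.
    lens_radius = focal_length_mm / (2.0 * f_number)
    r = lens_radius * math.sqrt(rng.random())
    phi = 2.0 * math.pi * rng.random()
    origin = (r * math.cos(phi), r * math.sin(phi), 0.0)

    # Every sample for this pixel aims at the same focus point, so geometry
    # on the plane of focus stays sharp and everything else smears out.
    d = tuple(p - o for p, o in zip(focus_point, origin))
    length = math.sqrt(sum(c * c for c in d))
    direction = tuple(c / length for c in d)
    return origin, direction

origin, direction = sample_thin_lens_ray((0.1, -0.05, 1.0), 50.0, 2.0, 2000.0)
print(origin, direction)
```

Averaging many such rays per pixel is what produces the blur, which is also why ray-traced DOF needs more samples, and more render time, than a pinhole camera.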

Offline DOF uses accurate ray tracing for photoreal results, while real-time engines optimize performance. Gamers, for example, expect smooth frame rates, so developers rely on faster, screen-space techniques, balancing immersion with efficiency.

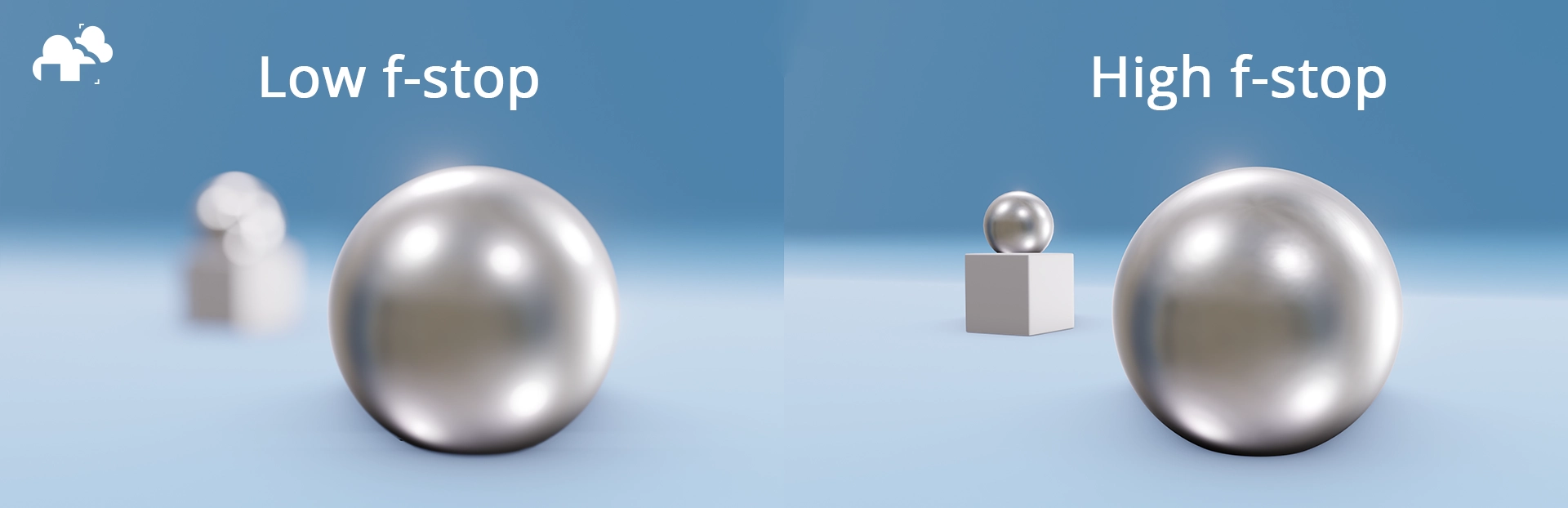

Aperture is the opening inside a lens that controls how much light passes through. A low f-stop (a wide opening) lets in more light and produces a shallow depth of field with strong background blur. A high f-stop lets in less light but keeps more of the scene sharp, perfect for landscapes or product shots. In render engines, the f-stop setting controls focus depth in the same way, and with a physical camera model it can also influence exposure.
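
The link between f-stop, opening size, and light can be put into numbers. The sketch below assumes the standard definition of the f-number (focal length divided by the diameter of the opening) and that the light admitted scales with the area of that opening. The function names are illustrative only.

```python
import math

def aperture_diameter_mm(focal_length_mm, f_number):
    """Diameter of the lens opening: D = f / N."""
    return focal_length_mm / f_number

def stops_between(f_number_a, f_number_b):
    """Exposure change in stops when moving from f/a to f/b.

    Light scales with opening area, so each factor of sqrt(2) in the
    f-number is one stop. Positive means f/b admits less light.
    """
    return 2.0 * math.log2(f_number_b / f_number_a)

print(aperture_diameter_mm(50, 2.0))  # 25.0
print(stops_between(2.0, 4.0))        # 2.0
```

So a 50 mm lens at f/2 has a 25 mm opening, and stopping down to f/4 costs two stops of light, meaning a quarter of the light reaches the sensor.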

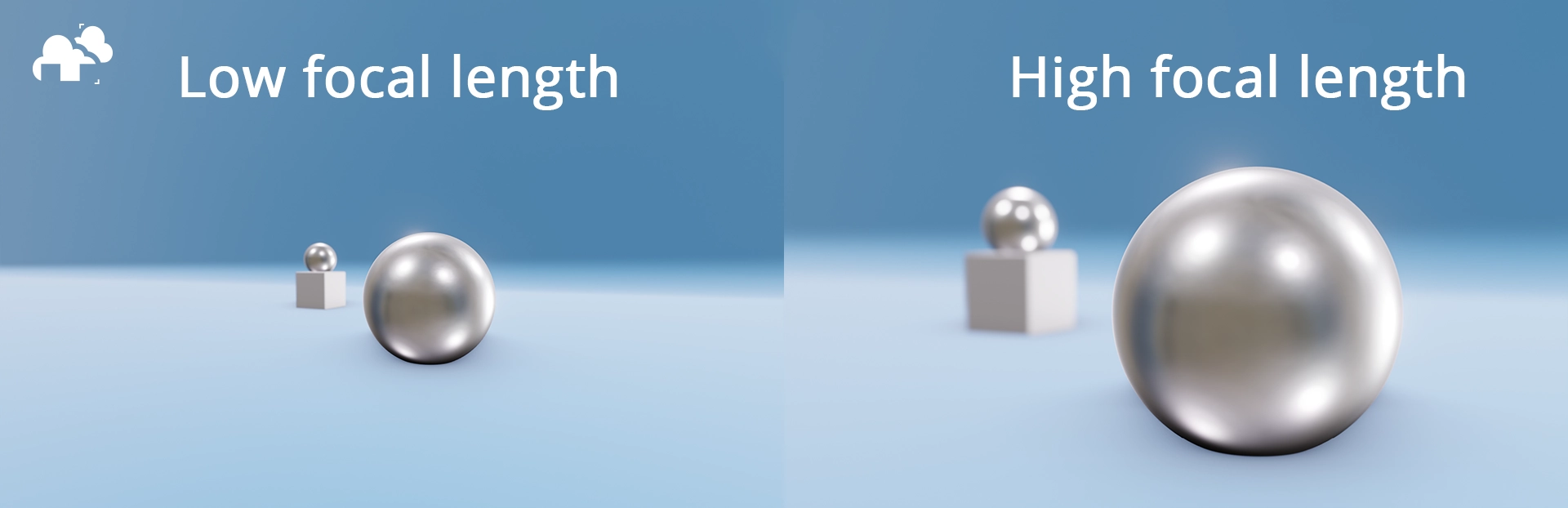

Focal length changes not only how close things look but also how space is perceived. Wide lenses (short focal lengths) capture more of the environment and exaggerate the distance between foreground and background, while long lenses (telephoto) narrow the view, enlarge distant objects, and visually compress depth. At the same subject distance and f-stop, a longer focal length also produces a shallower depth of field. In 3D, adjusting focal length affects perspective just as much as framing.
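
How much "wider" or "narrower" a lens sees can be estimated from focal length and sensor width. This is a rough sketch assuming a simple rectilinear (pinhole-style) model and a 36 mm full-frame sensor width as the default. The function name is hypothetical.

```python
import math

def horizontal_fov_deg(focal_length_mm, sensor_width_mm=36.0):
    """Horizontal field of view for a rectilinear lens focused far away."""
    half_angle = math.atan(sensor_width_mm / (2.0 * focal_length_mm))
    return math.degrees(2.0 * half_angle)

for focal in (18, 50, 200):
    print(f"{focal} mm lens: {horizontal_fov_deg(focal):.1f} degrees")
```

Under these assumptions an 18 mm lens sees roughly 90 degrees horizontally, while a 200 mm lens sees only about 10 degrees, which is why the same scene feels spacious with one and compressed with the other.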

How close the camera is to your subject dramatically affects depth of field. Move in tight, and focus becomes razor thin, hence the soft backgrounds in macro shots. Step back, and the focus deepens, keeping more elements sharp. In 3D software, you can mimic this by changing the camera’s focus distance or even scaling the scene.
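
The combined effect of aperture, focal length, and subject distance can be estimated with the standard thin-lens depth of field approximations. The sketch below is a small calculator under those approximations. The 0.03 mm circle of confusion is a commonly used full-frame value and is an assumption here, as are the function names.

```python
import math

def hyperfocal_mm(focal_length_mm, f_number, coc_mm=0.03):
    """Focus distance beyond which the far limit reaches infinity."""
    return focal_length_mm ** 2 / (f_number * coc_mm) + focal_length_mm

def dof_limits_mm(focal_length_mm, f_number, focus_mm, coc_mm=0.03):
    """Return (near, far) limits of acceptable sharpness in millimetres."""
    f = focal_length_mm
    h = hyperfocal_mm(f, f_number, coc_mm)
    near = focus_mm * (h - f) / (h + focus_mm - 2.0 * f)
    if focus_mm >= h:
        # Focused at or past the hyperfocal distance: sharp out to infinity.
        return near, math.inf
    far = focus_mm * (h - f) / (h - focus_mm)
    return near, far

for distance in (500, 2000, 8000):
    near, far = dof_limits_mm(50, 2.8, distance)
    print(f"focus at {distance} mm: sharp from {near:.0f} mm to {far:.0f} mm")
```

Running it for a 50 mm lens at f/2.8 shows the zone of sharpness widening quickly as the focus distance grows, which matches the razor-thin focus of close-up work and the deeper focus of distant subjects.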

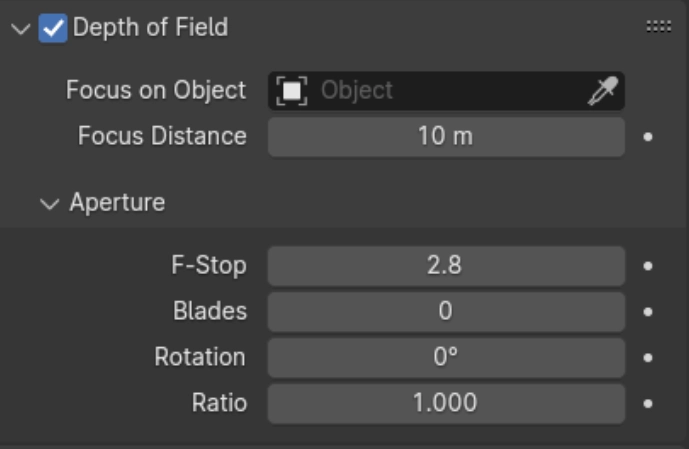

Modern 3D applications give artists a range of tools to create cinematic depth of field that mirrors real-world optics. Autodesk Maya and 3ds Max now feature physical camera models where focal length, f-stop, and sensor size behave like real lenses, allowing artists to animate focus pulls or set hyperfocal distances with precision. Blender offers two distinct approaches, as seen in the video below by Abe Leal 3D: Cycles delivers accurate, ray-traced DOF with natural bokeh and defocus aberrations, while Eevee provides a faster, post-process approximation that is well suited to previews and real-time visualization. Together, these workflows let artists balance realism and efficiency, making it possible to integrate film-like focus effects into everything from product design to feature animation.

Unlike real-time engines, offline 3D rendering for film, product visualization, and architecture often involves massive amounts of data and high-resolution outputs. Depth of field here isn’t just a stylistic effect; it’s also a computational challenge. Physically based renderers like Arnold, V-Ray, and Cycles simulate lens blur by sampling rays across an aperture, which can increase render times. To manage this, studios rely on render farms and distributed computing, spreading the workload across hundreds or thousands of cores. This allows them to achieve natural-looking DOF with accurate bokeh and defocus aberrations, even in 8K frames or multi-minute sequences. For efficiency, artists may use denoising algorithms, adaptive sampling, or render a sharp image with a separate z-depth pass and add the DOF blur later in compositing. These pipeline choices let studios balance artistic intent with deadlines, ensuring that cinematic DOF remains both realistic and production-ready.
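
When DOF is added in compositing from a z-depth pass, the core step is turning each pixel's depth into a blur size. The sketch below uses the thin-lens circle of confusion formula for that. It assumes the depth pass stores linear camera-space distance in millimetres (real passes are often normalized or in scene units and need converting first), and all function and parameter names are hypothetical rather than taken from any compositing package.

```python
def coc_diameter_mm(depth_mm, focus_mm, focal_length_mm, f_number):
    """Blur disk diameter on the sensor for a point at depth_mm."""
    aperture_mm = focal_length_mm / f_number
    return abs(aperture_mm * focal_length_mm * (depth_mm - focus_mm)
               / (depth_mm * (focus_mm - focal_length_mm)))

def coc_map_px(depth_map_mm, focus_mm, focal_length_mm, f_number,
               sensor_width_mm=36.0, image_width_px=1920):
    """Convert a depth map (rows of distances) to blur diameters in pixels."""
    px_per_mm = image_width_px / sensor_width_mm
    return [[coc_diameter_mm(d, focus_mm, focal_length_mm, f_number) * px_per_mm
             for d in row]
            for row in depth_map_mm]

# Tiny stand-in for a z-depth pass: near, in-focus, and far pixels.
depth_pass = [[1000.0, 2000.0, 4000.0],
              [1500.0, 2000.0, 8000.0]]
for row in coc_map_px(depth_pass, focus_mm=2000.0, focal_length_mm=50.0, f_number=2.0):
    print([round(v, 1) for v in row])
```

A compositor would then blur each pixel with a kernel of roughly that diameter. Pixels at the focus distance get a diameter of zero and stay sharp, while the blur grows for nearer and farther pixels.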

Knowing the difference between shallow and deep depth of field can help elevate 3D renders and bring more immersion through realism:

Shallow DOF isolates subjects, making it ideal for portrait photography, close-ups, or dramatic focus pulls in cinematography. It creates intimacy and directs viewer attention. This video by LinkedIn Learning shows how shallow DOF works in portrait photography and includes a quick comparison with the same portrait shot with deep DOF.

Deep depth of field, common in landscape photography and architectural visualization, communicates scale and clarity. Wide-angle lenses and smaller apertures help achieve this. Although this video by Sam Worden is old, it still does a great job of showing how deep depth of field works in photography. The same principles translate easily to 3D environments, as seen in another example below by architect and CG artist Bartosz Domiczek:

In interactive 3D environments, selective DOF can emphasize HUD elements or guide attention to mission-critical details, blending usability with cinematic style. Website design can borrow the same idea by simulating DOF with blur. In this video by Flux Academy, we see how blur can be applied behind elements to make the main elements stand out more, giving the page an illusion of DOF:

The 3D rendering market is projected to grow from around $4.90 billion in 2025 to around $13.58 billion by 2030. As a major driver of realism, DOF is used across a wide range of professional studios and applications.

Animation studios use depth of field sparingly, ensuring it feels natural and supports emotional beats without distracting from performance. By carefully controlling focus, they subtly direct attention to key characters or props, creating a cinematic feel that enhances immersion. This approach mirrors live-action cinematography while maintaining clarity in stylized worlds.

Studios employ DOF in game engines like Unreal Engine for cutscenes that mimic film, with rack focus shifts and shallow DOF for dramatic emphasis. These techniques help replicate the look of high-end cinema, bridging the gap between gameplay and narrative. Players intuitively understand where to look, and the focus shifts amplify the emotional tone of the story, as seen in this video by 3D college on using Unreal’s DOF:

Visualization specialists use DOF to add realism and guide clients’ eyes through a space, combining hyperfocal distance techniques with selective blur. It helps viewers experience the design as though they were walking through it with a real camera in hand. This not only grounds the imagery in realism but also makes the presentation more engaging and easier to interpret.

Exaggerated blur or poorly shaped bokeh can break immersion. Always reference real photographs when adjusting DOF in rendering.

Fixed depth of field in current VR headsets can contribute to visual strain due to the vergence-accommodation conflict. Incorporating gaze-contingent depth-of-field shows promise in reducing this discomfort by dynamically adjusting focus based on where users look, though widespread implementation is still limited by hardware constraints.

Overly complex DOF calculations can slow renders or gameplay. Choose methods appropriate to the medium and audience.

Looking ahead, AI-powered monocular depth estimation is already transforming how DOF is implemented. Models like DepthFM and Apple’s Depth Pro can quickly generate high-resolution depth maps from single images, enabling post-process blur effects without complex 3D geometry. Parallel innovations in light-field and holographic displays hint at a future where natural depth of field and motion parallax are rendered optically rather than via blur, offering lifelike depth cues directly through the display.