Generative AI is transforming 3D modeling by enabling the near-instant creation of complex digital assets from text prompts, images, or video. With the generative AI 3D-asset market valued at around 1.63 billion USD in 2024 and projected to reach 9.24 billion USD by 2032, AI-powered modeling is reshaping industries from gaming to architecture. Leading tools like NVIDIA’s GET3D and Meshy make high-quality modeling accessible to beginners while giving professionals faster workflows and greater creative freedom.

Generative AI is a type of artificial intelligence that uses machine learning models, typically deep neural networks, to produce new data such as images, videos, or 3D assets. In 3D modeling, this means creating shapes, textures, and environments from simple prompts or reference images.

Traditional 3D modeling often requires hours of manual polygonal modeling, sculpting, and texturing. Generative AI tools, on the other hand, can produce comparable draft assets in seconds, opening up workflows that were previously limited to studios with large teams.

Traditional modeling relies on skilled artists manually shaping geometry in software like Blender, Maya, or 3ds Max. Generative AI models, built on neural network architectures such as diffusion models and transformers, learn patterns of realistic form and texture from their training data and use them to generate entire assets.

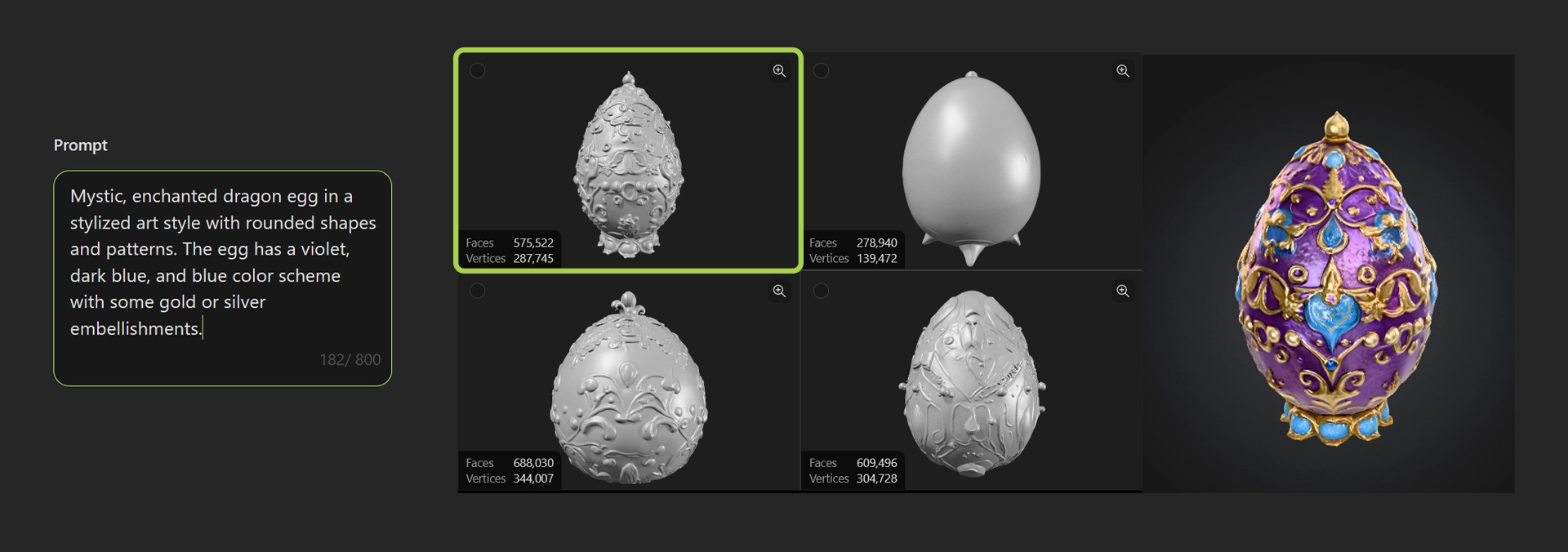

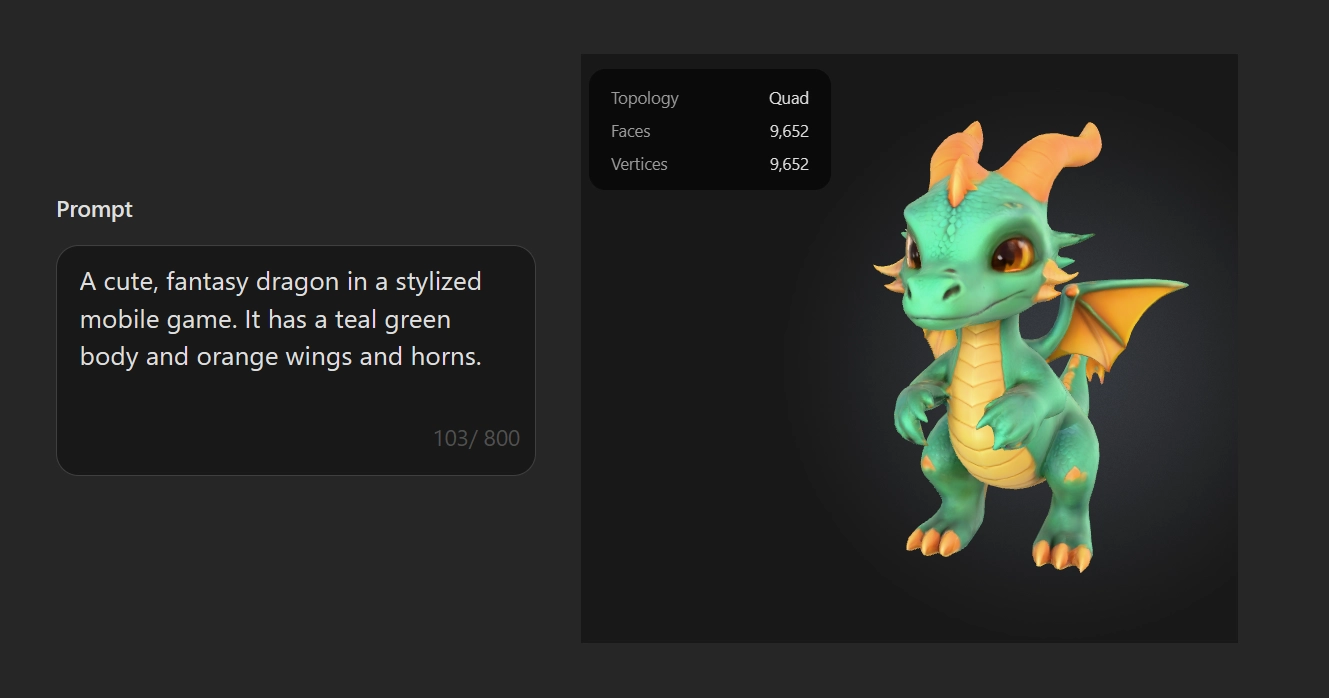

Text-to-3D: Write a prompt and get a model. Tools like GET3D and Meshy create usable meshes and textures.

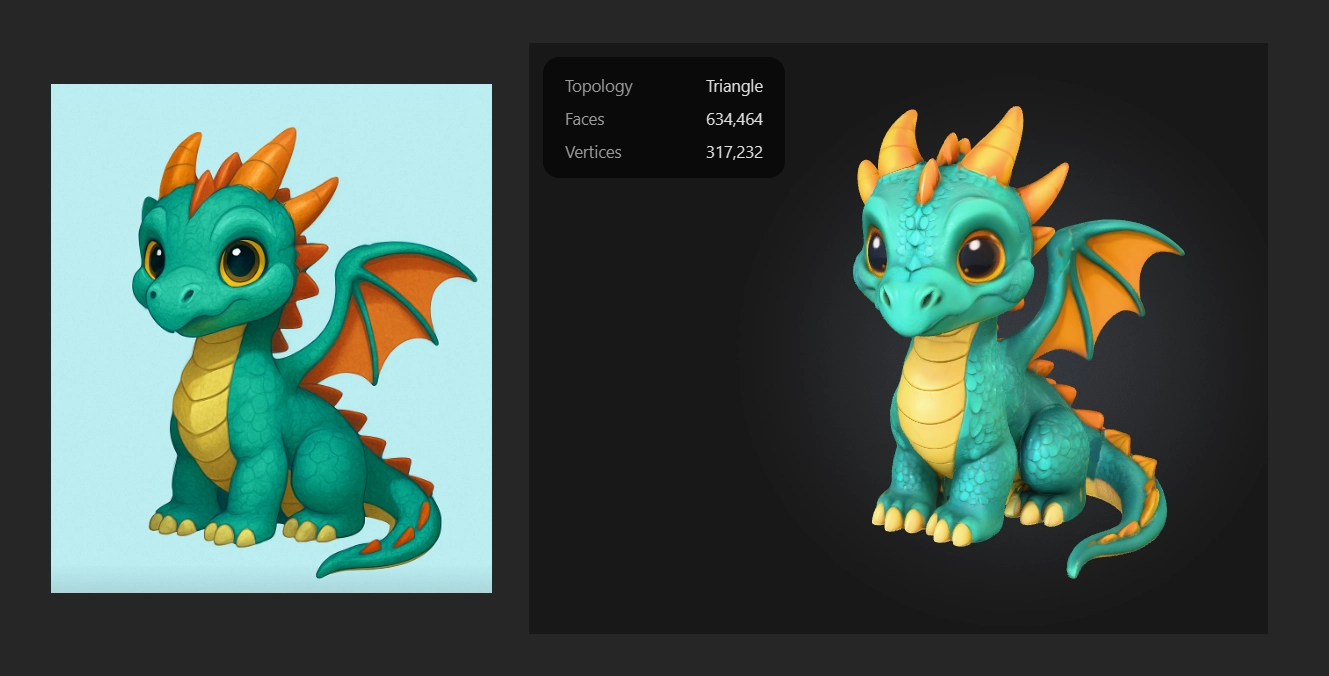

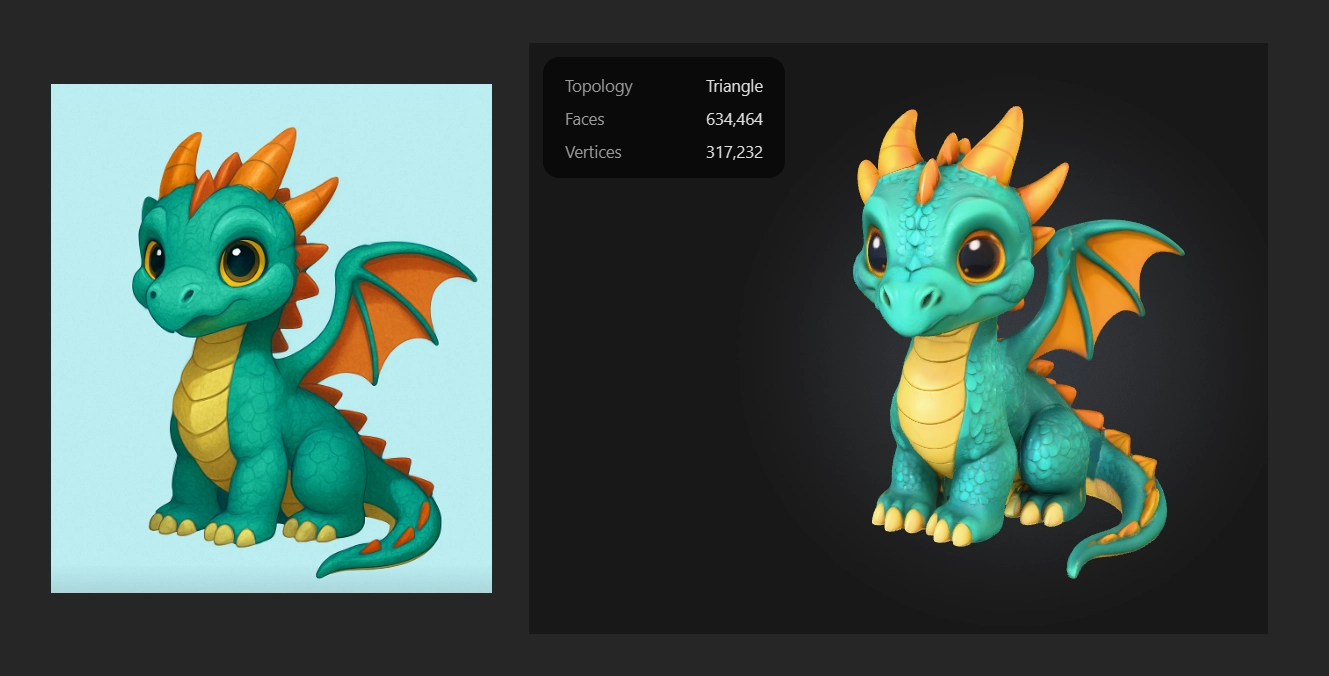

Image-to-3D: Upload an image and receive a 3D reconstruction. This suits e-commerce, product visualization, and projects that already have concept art.

Video-to-3D: Convert video sequences into volumetric models, useful for motion capture and animation.

Generative AI compresses production timelines: what once required hours of manual modeling now takes seconds. Because the tools handle much of the technical work, creators without deep technical training can still produce assets, while professionals can accelerate their pipelines.

Early generative models took over an hour to generate usable 3D shapes. Today, diffusion models and generative adversarial networks can produce results in under a dozen seconds. As Sanja Fidler, VP of AI research at NVIDIA, explains:

“We can now produce results an order of magnitude faster, putting near-real-time text-to-3D generation within reach for creators across industries.”

Real-time 3D generation opens the door to interactive design. Imagine adjusting a prompt and instantly previewing changes in a gaming environment or architectural walkthrough.

By automating labor-intensive tasks, generative AI reduces production costs. Studios save on man-hours, while freelancers gain access to workflows previously reserved for large teams.

The AI-generated 3D asset market, valued at $1.63 billion in 2024, is projected to reach $9.24 billion by 2032. More broadly, 78 percent of organizations already use AI in production workflows, and 86 percent of employers expect AI to reshape their businesses by 2030. AI 3D generation is transforming many industries through its speed and usefulness; today, the three most notable are gaming and entertainment, architecture, and e-commerce and product visualization.
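As a rough sanity check on the two market figures, the implied compound annual growth rate (CAGR) is (end value / start value) raised to the power 1/years, minus one. The sketch below only does arithmetic on the numbers quoted above; it assumes exactly eight compounding periods between 2024 and 2032, and the function name `cagr` is our own illustration, not taken from any market report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Market figures quoted in the text, in billions of USD (assumed 8 periods).
rate = cagr(1.63, 9.24, 2032 - 2024)
print(f"Implied CAGR 2024-2032: {rate:.1%}")  # prints: Implied CAGR 2024-2032: 24.2%
```

That works out to roughly 24 percent growth per year. Published reports may use a different base year or forecast window, so their stated CAGR can differ from this back-of-the-envelope figure.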

Game developers use generative AI to populate environments with props, characters, and landscapes. This reduces repetitive asset creation and makes world-building more efficient. Sunny Valley Studio demonstrates this in a video that uses text prompts to generate 3D game assets.

Architects can feed floor plans or sketches into generative models to produce instant 3D visualizations. This speeds up client presentations and shortens iteration cycles, leaving more room for experimentation and creativity. Urban Decoders demonstrates this in a video using Nano Banana AI.

Retailers use image-to-3D workflows to create realistic product models for virtual showrooms, augmented reality shopping experiences, and archviz scenes. In a video by Emunarq, a product photo is quickly turned into a 3D model and placed into a 3D scene.

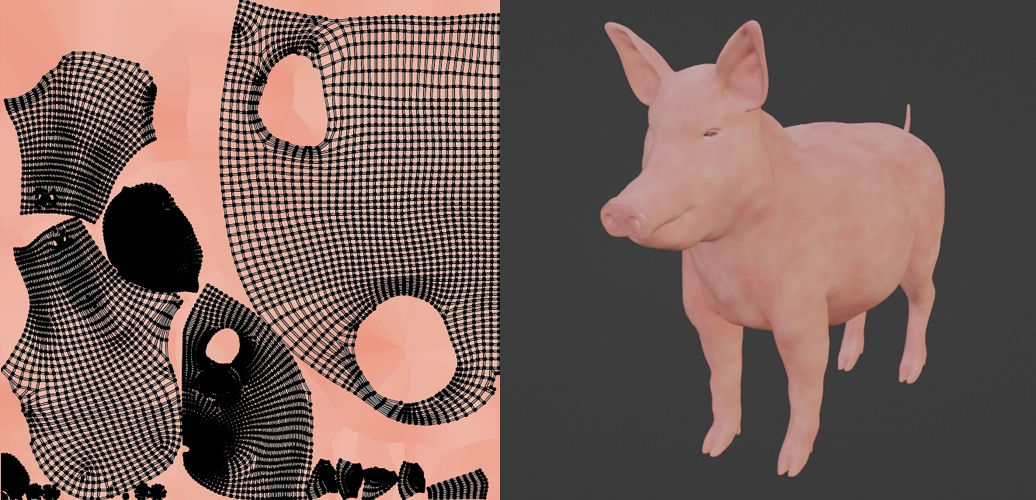

GET3D, an NVIDIA research model trained only on 2D images, generates high-quality textured 3D meshes. Integrated with NVIDIA Omniverse, it allows seamless collaboration across design, simulation, and virtual production pipelines.

While still a proof of concept, Autodesk’s Project Bernini is designed for “Design and Make” industries (product design, architecture, manufacturing, etc.) and focuses on generating functionally plausible 3D shapes, handling geometry and texture separately.

Online platforms such as Meshy, Tripo, Sloyd, and 3D AI Studio cater to hobbyists and small creators, offering accessible tools that integrate with traditional 3D workflows and require minimal technical expertise. These web-first platforms lower the barriers to rapid prototyping, concept art, and casual 3D creation, opening 3D work to a wider range of creators.

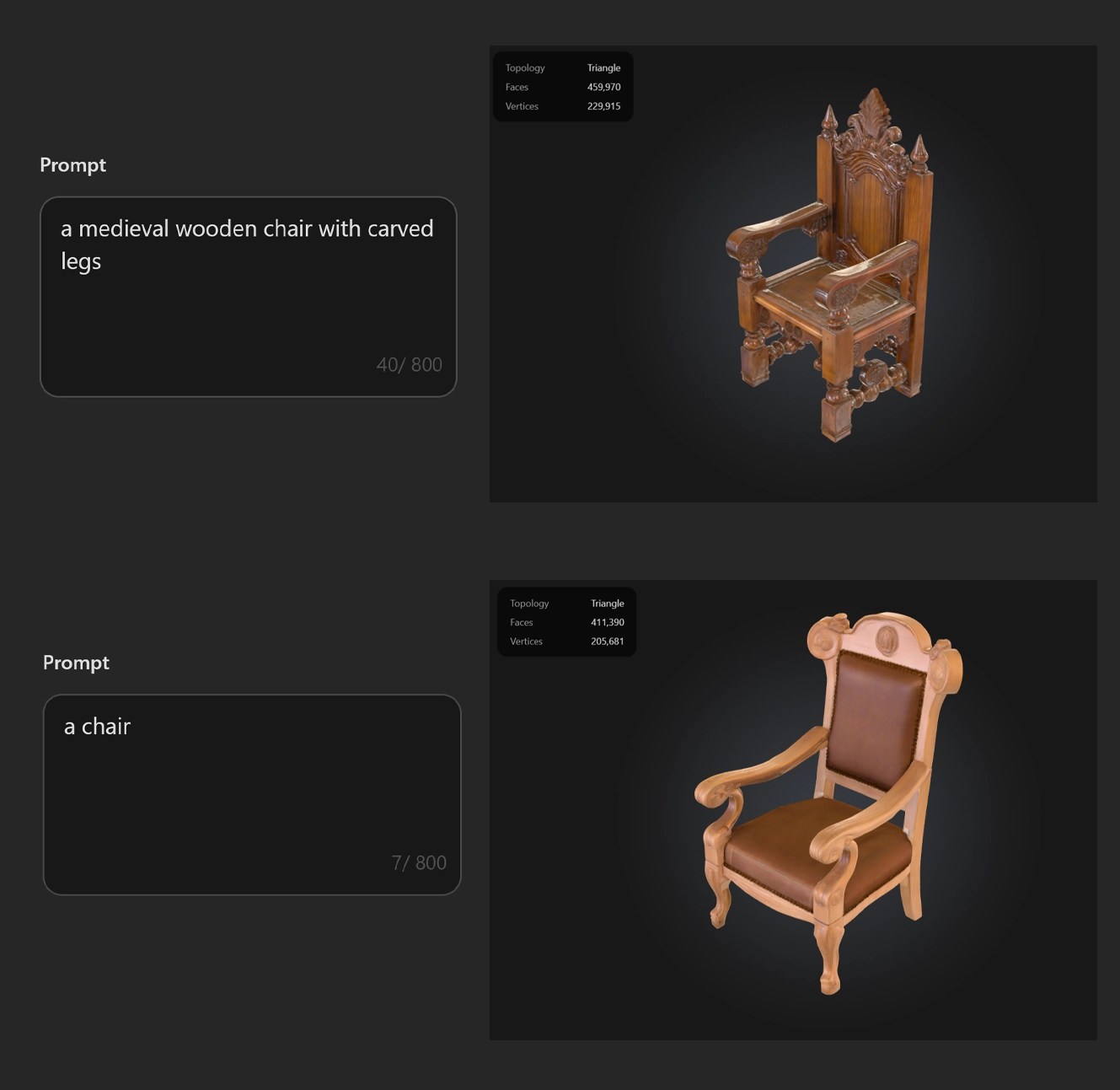

Prompt engineering is critical. Specificity leads to better results: “a medieval wooden chair with carved legs” produces more accurate geometry than simply asking for “a chair.”

High-quality reference images produce better reconstructions, and photos taken from multiple angles help diffusion models infer depth and geometry more accurately.

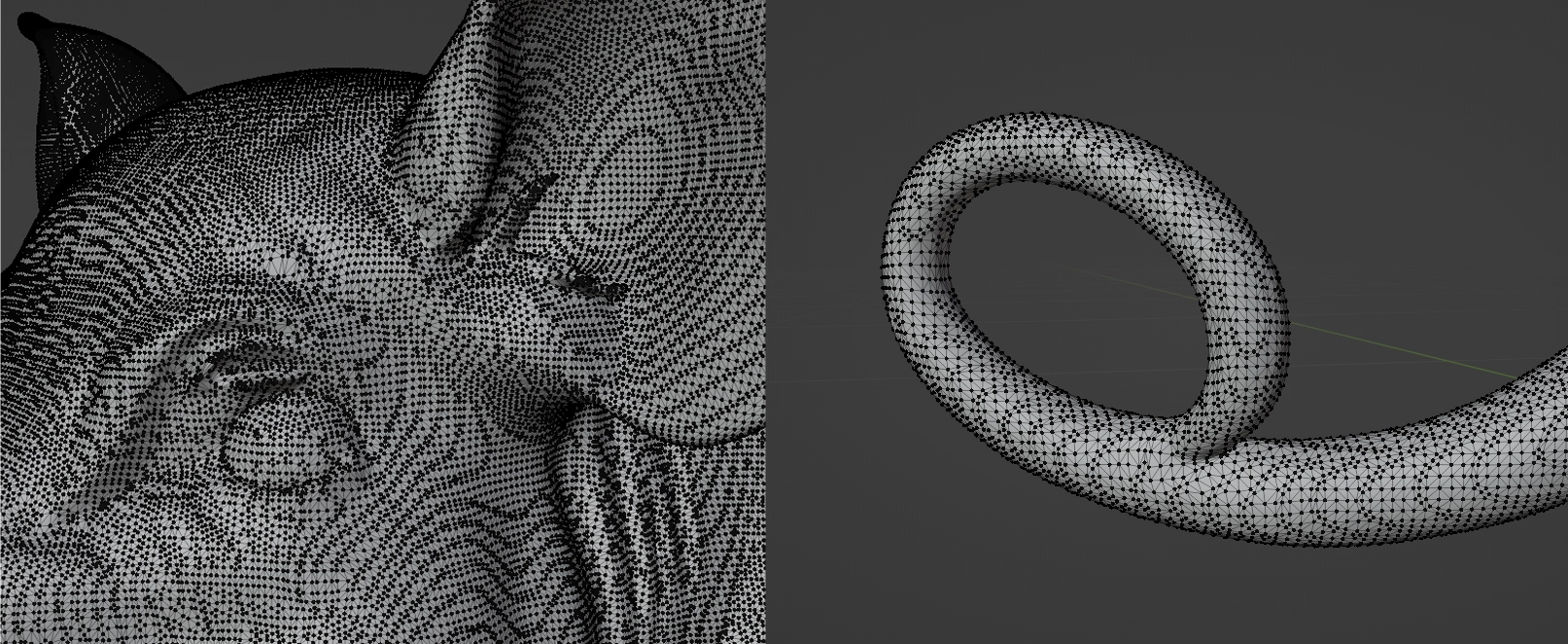

AI-generated assets often require refinement. Artists import outputs into Maya, Blender, or 3ds Max for retopology, UV mapping, and fine-tuned texture work.

Generated models may include non-manifold geometry or structural inaccuracies, requiring cleanup before production use.
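A common meaning of "non-manifold" is an edge shared by more than two faces, such as several triangles hinged on one edge like fins. Edges used by only one face mark holes or open borders, which matter when an asset must be watertight (for example, for 3D printing). The sketch below shows the idea behind this kind of check by counting how many triangles use each edge. The indexed-triangle representation and the function names are hypothetical illustrations, not any particular tool's API.

```python
from collections import Counter


def edge_face_counts(faces):
    """Count how many triangles share each undirected edge."""
    counts = Counter()
    for a, b, c in faces:
        for u, v in ((a, b), (b, c), (c, a)):
            # Store edges with sorted endpoints so (1, 0) and (0, 1) match.
            counts[(min(u, v), max(u, v))] += 1
    return counts


def find_problem_edges(faces):
    """Return (boundary_edges, non_manifold_edges), each sorted."""
    counts = edge_face_counts(faces)
    boundary = sorted(e for e, n in counts.items() if n == 1)
    non_manifold = sorted(e for e, n in counts.items() if n > 2)
    return boundary, non_manifold


# A closed tetrahedron: every edge is shared by exactly two faces.
tetra = [(0, 1, 2), (0, 3, 1), (1, 3, 2), (2, 3, 0)]
# Three triangles hinged on edge (0, 1): a classic non-manifold "fin".
fin = [(0, 1, 2), (0, 1, 3), (0, 1, 4)]

print(find_problem_edges(tetra))
print(find_problem_edges(fin))
```

This only inspects edges; a fuller check would also catch non-manifold vertices, where two surfaces touch at a single point.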

Textures can lack resolution or realism, particularly for reflective or transparent materials, so artists often supplement AI textures with traditional shading techniques. Generated UV maps can also be fragmented or poorly laid out, which makes the textures harder to adjust.

Different generative AI tools export in various formats. Converting and standardizing assets can be time-consuming, especially in pipelines with strict requirements.
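One simple way to standardize output is to write every mesh to a common interchange format. The sketch below serializes an in-memory triangle mesh to Wavefront OBJ, a plain-text format that Blender, Maya, and 3ds Max can all import. The function `to_obj` and the sample quad are hypothetical; in a real pipeline, a mesh library or the DCC tool's own exporter would normally handle this, and richer formats such as glTF or FBX also carry materials and animation.

```python
def to_obj(vertices, faces, name="asset"):
    """Serialize a triangle mesh to Wavefront OBJ text.

    vertices: list of (x, y, z) floats
    faces: list of (i, j, k) zero-based vertex indices
    """
    lines = [f"o {name}"]
    for x, y, z in vertices:
        lines.append(f"v {x:.6f} {y:.6f} {z:.6f}")
    for i, j, k in faces:
        # OBJ face indices are 1-based, so shift each index by one.
        lines.append(f"f {i + 1} {j + 1} {k + 1}")
    return "\n".join(lines) + "\n"


# A unit square split into two triangles.
quad_vertices = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)]
quad_faces = [(0, 1, 2), (0, 2, 3)]

obj_text = to_obj(quad_vertices, quad_faces, name="quad")
print(obj_text)
```

The main pitfall this illustrates is index conventions: most in-memory mesh code counts from zero, while OBJ counts from one.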

Generative AI is best seen as a co-pilot. It automates repetitive tasks but leaves creative direction and refinement to human artists.

Artists must learn to guide AI models with precise prompts, integrate generated assets, and refine results. Prompt engineering, data curation, and AI tool fluency are emerging skill sets.

New roles are appearing, such as AI pipeline specialists and virtual world curators. Far from replacing artists, generative AI expands opportunities for them. Some of these jobs already exist, such as clean-up artists who repair the meshes of AI-generated 3D assets.

Generative AI is transforming medical imaging by producing synthetic scans and modeling anatomical details from patient data, which can aid diagnostics, surgical preparation, and training. Approaches such as latent diffusion, which has been used to generate detailed brain images, show how these techniques can enrich available data and improve the clarity and accuracy of clinical visualization.

AI-powered digital twins (virtual replicas of physical systems) enable real-time testing, predictive maintenance, and performance optimization of real-world machinery through continuous simulation and data-driven analytics. AI can also help create engineering parts, as MecAgent shows in a demonstration of AI as a useful tool for engineers.

Robotics researchers use AI-generated 3D environments to train autonomous agents, reducing reliance on costly real-world testing. In a BuzzRobot video, guest speaker Fan-Yun Sun explains how AI can be used to create 3D worlds for agent training.

The future of AI-generated 3D content points toward real-time collaboration on shared platforms such as Spline, with generative models built in so that teams can co-create regardless of location. Generative 3D tools are also being woven into VR, AR, and metaverse environments, enabling instant asset generation for designing immersive worlds on the fly. At the same time, generative AI is democratizing 3D content creation: by lowering barriers to entry, it makes modeling accessible to educators, marketers, and hobbyists alike, broadening participation, diversifying content, and accelerating innovation.

Generative AI in 3D modeling is not about replacing artists but amplifying them. With tools like NVIDIA GET3D, Meshy, and Autodesk’s experimental Project Bernini, entire industries are accelerating workflows, cutting costs, and unlocking creativity. As models become faster and more accurate, the line between manual design and AI generation will blur, ushering in a new era of digital creation.