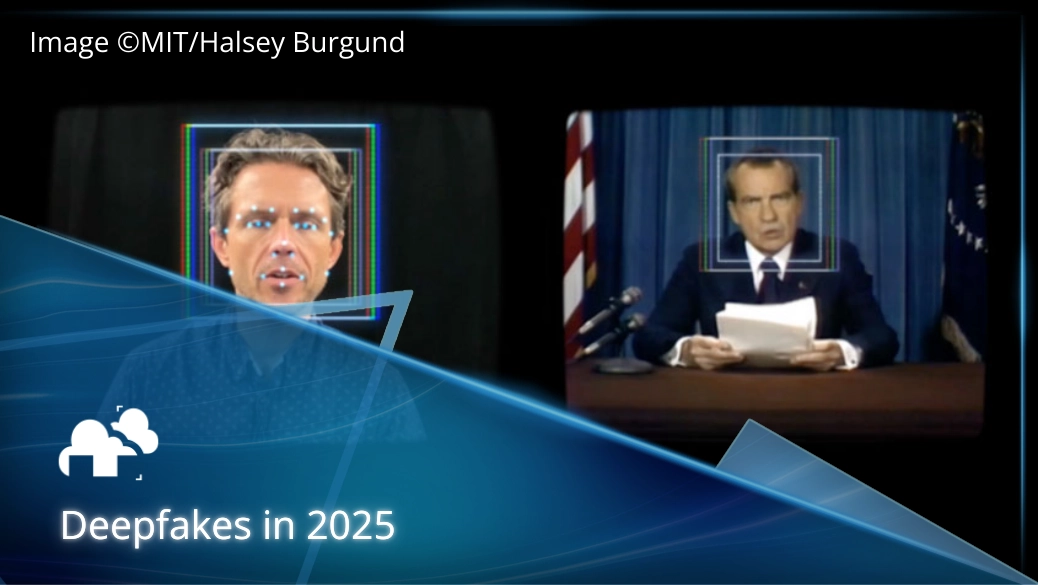

• Deepfake video generators are becoming more powerful and easier to use.

• In 2024, many businesses reported deepfake fraud losses in the hundreds of thousands of dollars.

• Most people cannot reliably distinguish high‑quality deepfake videos from real footage.

• Tools now range from beginner-friendly platforms to advanced software used in film and content creation.

• Legal and ethical use of deepfakes requires consent and responsible practices.

Deepfake video generators use artificial intelligence to create highly realistic synthetic video content that can replace or manipulate faces and voices. These tools are widely accessible in 2025 and find use in marketing, entertainment, education, and storytelling workflows. The market is growing rapidly, but so are misuse risks and fraud cases. Protecting yourself and your business means understanding how the technology works, knowing legal boundaries, and using verification systems.

Deepfake video generators use machine learning models to analyze data from real videos and images, then create new content that looks like a specific person speaking or moving. This usually involves training a model on many images and video frames of a person, then synthesizing a new video in which that person’s face or voice is convincingly recreated.

At the core of these tools are machine learning algorithms that learn patterns from large sets of video and image data. These models can map facial expressions and lip movements to new audio or text inputs to produce lifelike results. Advances in generative AI mean that some applications can now produce video from simple text prompts.

Early deepfake systems used generative adversarial networks (GANs), which pit two neural networks against each other to improve realism: one generates fakes and the other tries to catch them. Recent platforms add transformer-based models and other large-scale architectures that make workflows faster and outputs more convincing. This shift reflects broader changes in artificial intelligence research and in how AI models synthesize complex human data such as faces and voices.
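For readers curious about the mechanics, the original GAN formulation (Goodfellow et al., 2014) writes this contest as a minimax game between a generator $G$, which maps random noise $z$ to a synthetic sample, and a discriminator $D$, which outputs the probability that its input is real. This is the textbook objective, shown for illustration only; commercial deepfake tools do not necessarily train with it.

$$
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

$D$ is rewarded for assigning high probability to real frames and low probability to generated ones, while $G$ is trained to push $D(G(z))$ toward 1. In practice, $G$ is often trained to maximize $\log D(G(z))$ instead, which gives stronger gradients early in training.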

Today’s landscape includes tools for everyone from novice creators to advanced developers.

HeyGen is known for letting users choose from many avatar templates to generate videos without coding or deep technical expertise. It packages AI-generated content for professional workflows such as presentations, training, and short-form content (HeyGen). Creating digital twins is also becoming popular, as shown in this video by Nicky Saunders:

DeepFaceLab remains popular with more technical users who want control over each step of the deepfake creation process. It is powerful but demands more computing power and a steeper learning curve than commercial options (ScienceDirect).

Platforms like Kapwing, Synthesia, and Pinokio combine visual editing tools with AI video generation features. They pair APIs with simple interfaces so anyone can produce lifelike AI video clips without deep technical know-how.

Pricing varies widely between platforms and significantly affects the user experience. Free plans let you experiment but usually limit resolution or duration, or add watermarks to outputs. They are useful for testing workflows but not for professional use.

Paid tools often use subscriptions or credits to unlock more features, faster processing, and a wider selection of avatars. For example, many commercial tools offer tiered plans for marketing teams or educators.

When you scale workflows to high resolution or longer clips, compute time and storage add up. Longer export times or slower rendering can affect schedules and budgets. And if the generated output is not up to standard, each re-render adds to the cost.
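The effect of do-overs on a budget can be estimated with simple arithmetic. The sketch below assumes a hypothetical credit-based plan (the per-minute credit cost and credit price are placeholders, not any real platform's pricing) and assumes each render independently fails review with some probability, so the expected number of attempts per usable clip follows a geometric distribution: 1 / (1 − failure rate).

```python
def expected_attempts(failure_rate: float) -> float:
    """Expected renders per usable clip if each attempt independently
    fails review with probability `failure_rate` (geometric distribution)."""
    if not 0.0 <= failure_rate < 1.0:
        raise ValueError("failure_rate must be in [0, 1)")
    return 1.0 / (1.0 - failure_rate)


def estimate_render_cost(
    minutes: float,
    credits_per_minute: float,
    price_per_credit: float,
    failure_rate: float = 0.0,
) -> float:
    """Rough budget for a batch of generated video, including re-renders.

    All pricing inputs are hypothetical placeholders; substitute the
    figures from your own platform's plan.
    """
    base_cost = minutes * credits_per_minute * price_per_credit
    return base_cost * expected_attempts(failure_rate)


if __name__ == "__main__":
    # Hypothetical plan: 10 minutes of output, 1 credit per minute, $2 per credit,
    # and half of all renders need a do-over.
    print(estimate_render_cost(10, 1, 2.0, failure_rate=0.5))  # 40.0
```

Even a modest failure rate matters: if one render in four needs redoing, you should expect about 1.33 attempts per clip, roughly a third over the base cost.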

Deepfake video generators open creative doors but also raise legal and ethical questions:

Companies use AI avatars to produce training videos in multiple languages and create marketing content faster than traditional filming allows. These tools reduce costs and make user-generated content possible at scale. For a multilingual example, see this video by Mike Russell, in which he used AI to alter his expressions and language and keep the two in sync:

Filmmakers and creators experiment with synthetic media for visual effects and storytelling. The technology can augment animation or help produce scenes that would otherwise be costly to film.

In a discussion of synthetic media, AI expert Nina Schick points out that deepfakes are just one form of visual disinformation within the broader media ecosystem, and that they will influence how content is created and perceived across all industries (80,000 Hours).

Using someone’s likeness without permission can violate personality rights or other laws. Consent forms and transparent disclosure help protect creators and subjects. In many jurisdictions, failure to obtain proper consent can lead to legal penalties, takedown orders, or even criminal charges, especially when the deepfake content is used in a misleading or harmful context (Agility PR Solutions).

Beyond creative uses, deepfakes are also being used for scams and fraud:

In multiple cases, employees have been tricked into transferring millions to fraudsters who posed as executives on synthetic video calls. These scams show how convincing high-quality deepfake content can be.

Experts warn that as deepfakes improve, humans will struggle to tell real from fake. According to research quoted by ABC Radio, AI-generated content will soon be so pervasive and convincing that the naked eye may not be able to distinguish fake from real (ABC).

"If anything can be faked, then everything can be denied." - Nina Schick, author of Deepfakes: The Coming Infocalypse

Look out for inconsistent lighting, unnatural movement, or mismatched audio; these subtle cues can indicate synthetic content. Image quality may also shift abruptly in manipulated segments, along with other small details that just don’t look right.
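As a toy illustration of the "quality shift" cue (not a real deepfake detector), the sketch below scores each frame's sharpness with the variance of a 4-neighbour Laplacian, a common blur measure, and flags frames whose score differs sharply from the clip's median. It assumes frames are already decoded into grayscale 2D lists; the function names and the 2× threshold are illustrative assumptions. It will also flag legitimate focus changes, scene cuts, and motion blur, so treat any hit as a prompt for a closer look.

```python
from statistics import median, pvariance
from typing import List, Sequence

Frame = Sequence[Sequence[float]]  # assumed: grayscale pixel intensities, row-major


def laplacian_variance(frame: Frame) -> float:
    """Sharpness score: variance of the 4-neighbour Laplacian over interior pixels.
    Smoothed or blurry frames score low; crisp detail scores high."""
    responses = []
    for y in range(1, len(frame) - 1):
        for x in range(1, len(frame[0]) - 1):
            lap = (frame[y - 1][x] + frame[y + 1][x]
                   + frame[y][x - 1] + frame[y][x + 1] - 4 * frame[y][x])
            responses.append(lap)
    return pvariance(responses) if responses else 0.0


def flag_quality_shifts(scores: Sequence[float], ratio: float = 2.0) -> List[int]:
    """Indices of frames whose sharpness differs from the clip median by more
    than `ratio` times in either direction (hypothetical threshold)."""
    med = median(scores)
    if med == 0:
        # Every typical frame is featureless; anything with detail stands out.
        return [i for i, s in enumerate(scores) if s > 0]
    return [i for i, s in enumerate(scores) if s < med / ratio or s > med * ratio]


if __name__ == "__main__":
    # Synthetic 4x4 "frames": a crisp checkerboard and a flat, featureless frame.
    checker = [[(x + y) % 2 for x in range(4)] for y in range(4)]
    flat = [[0.5] * 4 for _ in range(4)]
    clip = [checker, checker, flat, checker, checker]
    scores = [laplacian_variance(f) for f in clip]
    print(flag_quality_shifts(scores))  # [2]
```

Using the median rather than the mean keeps a single odd frame from dragging the baseline toward itself.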

For critical actions, such as a payment request from a superior or co-worker, require secondary confirmation through a separate channel, like a phone call or a secure authentication code system.
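A secure authentication code system can be as simple as time-based one-time passwords (TOTP, RFC 6238), the scheme behind most authenticator apps. The sketch below uses only Python's standard library and assumes the two parties enrolled a shared secret in person or over a trusted channel beforehand, so someone who controls only the video call cannot produce a valid code. The function names are illustrative; a production system should use a vetted library and secure secret storage.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation: low 4 bits select a 4-byte slice
    value = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP keyed on the current time step."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify_code(secret: bytes, submitted: str, at: Optional[float] = None,
                window: int = 1, step: int = 30, digits: int = 6) -> bool:
    """Accept a code from the current step or up to `window` steps either side
    to tolerate small clock drift; compares in constant time."""
    now = time.time() if at is None else at
    for drift in range(-window, window + 1):
        expected = totp(secret, now + drift * step, step, digits)
        if hmac.compare_digest(expected, submitted):
            return True
    return False
```

For example, before approving a transfer requested on a video call, the finance team could ask the "executive" to read out the current code from their authenticator app and check it with `verify_code(shared_secret, code)`. A cloned face or voice does not carry the secret.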

Automated AI detection tools can help, but they must be updated continuously as deepfake generators evolve. No system is perfect yet, so it is still important to train your own eye rather than rely too heavily on detection systems.

Top-tier generators now produce full HD or even higher-resolution results that look very much like original footage. Lip sync and emotional expression are often credible. The results are not perfect yet, but they can still be scary good, as in this example from Sara Dietschy:

High-quality video takes longer to generate and produces large files, so plan for storage and bandwidth. Keep this in mind when deciding how and where you will use the output.
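Storage needs can be estimated from the average bitrate: size = bitrate × duration ÷ 8. The bitrates used below are illustrative assumptions, not measured figures for any particular tool; actual rates depend on resolution, codec, and export settings, and the estimate ignores container overhead.

```python
def clip_size_mb(bitrate_mbps: float, seconds: float) -> float:
    """Approximate file size in megabytes (10**6 bytes) from average bitrate.
    Megabits per second x seconds = megabits; divide by 8 for megabytes."""
    return bitrate_mbps * seconds / 8


def library_size_gb(bitrate_mbps: float, seconds_per_clip: float, clips: int) -> float:
    """Total storage in gigabytes (10**9 bytes) for `clips` clips of equal length."""
    return clip_size_mb(bitrate_mbps, seconds_per_clip) * clips / 1000


if __name__ == "__main__":
    # Assumed average bitrate of 8 Mbps (illustrative only).
    print(clip_size_mb(8, 60))          # 60.0  -> one 1-minute clip
    print(library_size_gb(8, 120, 10))  # 1.2   -> ten 2-minute clips
```

Drafts and re-renders multiply these totals, so budget storage for every version you keep, not just the final cut.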

Even the best deepfakes sometimes show minor irregularities around the eyes, mouth, or shadows that experts use as clues to their synthetic nature. Keep this in mind, and run quality checks on your own output to get the best result possible.

The deepfake technology market is expanding rapidly, with strong investment in AI tools that support content creation, marketing, education, and software workflows. On the regulatory side, governments and technology platforms are creating policies on disclosure, consent, and how AI-created media should be labeled or governed.

As tools for generating synthetic video improve, so will the tools for detecting and authenticating real content. This dynamic shapes how the technology will be used responsibly.

Technology investor Reid Hoffman, who experimented with his own AI clone and emphasized ethical use, said that deepfakes are not inherently bad when applied responsibly, but warned of misuse if systems are not properly controlled (Business Insider).

"What we're trying to do, as techno-optimists say, is how do we shape it so it's applied well." - Reid Hoffman

In creative circles, some visual effects specialists involved in early deepfake demonstrations have described how AI tools could democratize content creation, allowing creators without traditional film equipment to produce convincing scenes that were once possible only in large studios.