GPU rendering uses specialized graphics hardware to accelerate the creation of 3D images and videos through parallel processing, often outperforming CPUs in speed for tasks like animation and gaming. This guide explores its role, how it compares with CPU rendering, top hardware choices for 2025, and more. Highlights include real-world applications in industries ranging from film production to architectural visualization, plus a quick guide to using GPUs effectively. Whether you're a beginner or a pro, GPU rendering can transform your workflow.

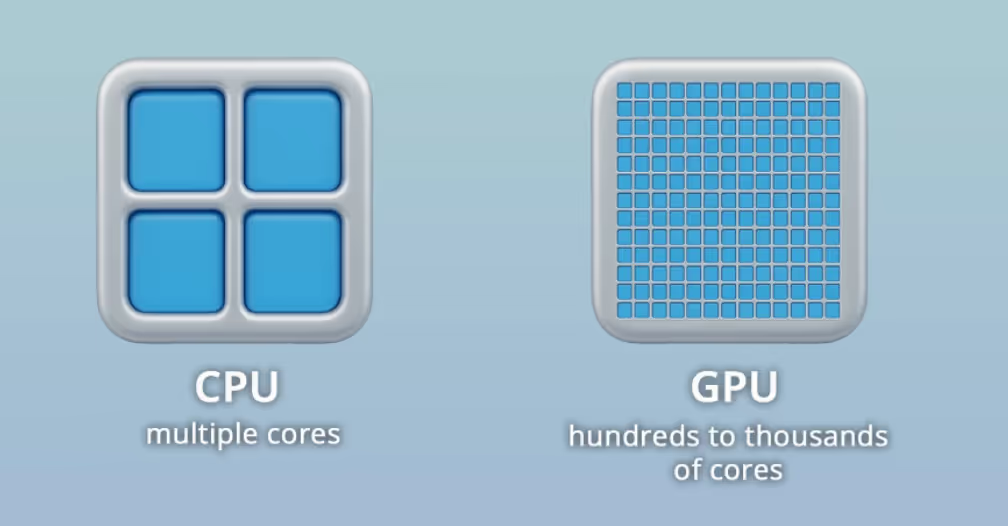

GPU rendering is the process of using a graphics processing unit to compute and generate images from 3D models. Unlike the central processing unit (CPU), which is optimized for handling a few complex tasks in sequence or across a small number of cores, the GPU is built to run thousands of lightweight threads at the same time. This massive parallelism makes GPUs especially effective for rendering tasks such as shading, texture mapping, ray tracing, and global illumination.
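To make the idea of massive parallelism concrete, here is a minimal Python sketch. It is an illustration only, not how any particular render engine is written. It shades a unit sphere with a simple Lambertian model, and the names `shade_pixel`, `render`, and `LIGHT_DIR` are hypothetical. The point is that each pixel is computed from its own index alone, so a GPU could assign one lightweight thread per pixel, whereas this CPU version evaluates the pixels one after another.

```python
import math

WIDTH, HEIGHT = 8, 8
LIGHT_DIR = (0.0, 0.0, 1.0)  # hypothetical light pointing toward the viewer


def shade_pixel(index: int) -> float:
    """Lambertian shading of a unit sphere for a single pixel.

    The function depends only on its own pixel index, so every call is
    independent of every other call: the property a GPU exploits by
    running one lightweight thread per pixel.
    """
    x, y = index % WIDTH, index // WIDTH
    # Map the pixel centre into [-1, 1] screen space.
    u = (x + 0.5) / WIDTH * 2.0 - 1.0
    v = (y + 0.5) / HEIGHT * 2.0 - 1.0
    r2 = u * u + v * v
    if r2 > 1.0:
        return 0.0  # the ray misses the sphere: background
    # Surface normal of the unit sphere at the visible hit point.
    n = (u, v, math.sqrt(1.0 - r2))
    # Lambert's cosine law: brightness = max(0, N . L).
    return max(0.0, sum(a * b for a, b in zip(n, LIGHT_DIR)))


def render() -> list[float]:
    # On a CPU this map runs sequentially; a GPU kernel would instead
    # launch WIDTH * HEIGHT threads that each evaluate shade_pixel once.
    return list(map(shade_pixel, range(WIDTH * HEIGHT)))


image = render()
```

On a GPU, the body of `shade_pixel` would become a kernel (for example in CUDA or a shading language), and the `map` would be replaced by a launch of `WIDTH * HEIGHT` threads.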

In modern 3D rendering, GPUs handle enormous amounts of geometry and data with hardware acceleration, enabling effects that were once computationally prohibitive. APIs such as Vulkan, DirectX, and OpenGL, along with frameworks like CUDA and OptiX, allow render engines to harness GPU power efficiently.

Today’s creative pipelines rely heavily on GPU rendering, which powers a wide range of industries and software. Below are some of the most common workflows where GPU rendering plays a critical role:

Software like Blender, Autodesk Maya with Arnold GPU, and Cinema 4D with Redshift enable artists to preview and render scenes in real time. By offloading intensive computations to the GPU, these tools shorten iteration cycles, giving animators and modelers the freedom to refine details without long wait times. Below, Game Dev Academy shows how to enable GPU rendering in Blender:

Architects increasingly rely on GPU-accelerated tools such as Lumion, Enscape, and Twinmotion to produce interactive visualizations. With real-time lighting previews and photorealistic renders, design changes can be assessed instantly, transforming the way architects present concepts to clients and stakeholders. In this video by Arch Viz Artist, we see how GPU rendering saves time while retaining good quality:

Studios use GPU-powered engines like OctaneRender and Redshift to render complex visual effects and sequences in a fraction of the time once required. GPU rendering also integrates into production pipelines with Houdini and Maya, making large-scale animation projects faster and more flexible. In this video from PNY Technologies, VFX artist Josh Harrison talks about the benefits of GPU rendering for artists:

For more specific use cases, such as texture painting and simulations, see this article on five different use cases of GPUs in 3D production.

Both CPU and GPU rendering are viable, but they excel in different ways.

Modern CPUs (typically based on x86 or ARM architectures) feature fewer cores, each optimized for complex, serialized computations. This makes them highly capable at tasks that require precision, complex logic, or sequential processing.

GPUs, by contrast, feature thousands of smaller cores optimized for parallel workloads. Their architecture makes them unmatched for accelerating shaders, image processing, and effects such as noise reduction, as well as other massively parallel workloads. As of 2025, the GPU market stands at USD 82.68 billion, with demand rising for AI-centric compute (training and inference) as well as for services like cloud gaming.
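The CPU/GPU split can be reasoned about with Amdahl's law, a standard model that does not come from this article: if a fraction p of a job can run in parallel on n cores, the ideal speedup is S(n) = 1 / ((1 - p) + p / n). The sketch below uses illustrative core counts and ignores the fact that a single GPU core is much slower than a CPU core, so treat it as an upper bound on scaling rather than a benchmark. The function name `amdahl_speedup` is our own.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup from Amdahl's law: 1 / ((1 - p) + p / n)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be in [0, 1]")
    if cores < 1:
        raise ValueError("cores must be >= 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)


# Illustrative core counts only, not the specs of any particular CPU or GPU.
for p in (0.50, 0.95, 0.999):
    cpu_like = amdahl_speedup(p, 16)
    gpu_like = amdahl_speedup(p, 10_000)
    print(f"p={p:.3f}: 16 cores -> {cpu_like:6.1f}x, 10,000 cores -> {gpu_like:7.1f}x")
```

With p = 0.5 the speedup stays below 2x no matter how many cores are added, while with p = 0.999 thousands of cores pay off. This is why GPUs shine on per-pixel and per-ray work, while CPUs remain competitive on serial logic.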

In 2025, hybrid rendering pipelines that combine CPUs and GPUs are becoming more common. Neural rendering techniques and AI-enhanced pipelines blur the line between the two even further, enabling scalable, high-quality output across platforms.

GPUs are typically the go-to option when speed and scalability are the priority. For example, an artist working in Blender or Maya who needs fast preview renders during the creative process will benefit from GPU rendering, since it delivers quick feedback and smoother iterations. In larger studios, multi-GPU setups connected with technologies like NVLink allow render farms and high-end workstations to handle massive animation sequences or real-time visualization projects efficiently.
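A simple way to put several GPUs to work on an animation is to give each GPU its own contiguous slice of frames. The `split_frames` helper below is a hypothetical sketch of that scheduling step only. It does not talk to any real render manager or use NVLink, and real farms often use smaller chunks or dynamic job queues instead.

```python
def split_frames(first: int, last: int, num_gpus: int) -> list[range]:
    """Split the inclusive frame range [first, last] into contiguous chunks,
    one per GPU, whose sizes differ by at most one frame."""
    if num_gpus < 1:
        raise ValueError("num_gpus must be >= 1")
    total = last - first + 1
    base, extra = divmod(total, num_gpus)
    chunks = []
    start = first
    for gpu in range(num_gpus):
        # The first `extra` GPUs take one additional frame each.
        size = base + (1 if gpu < extra else 0)
        chunks.append(range(start, start + size))
        start += size
    return chunks


for gpu_id, frames in enumerate(split_frames(1, 250, 4)):
    print(f"GPU {gpu_id}: frames {frames.start}-{frames.stop - 1}")
# GPU 0: frames 1-63
# GPU 1: frames 64-126
# GPU 2: frames 127-188
# GPU 3: frames 189-250
```

Contiguous chunks keep each GPU's frames together, which can help with caching scene data, but a queue that hands out frames on demand balances load better when frame costs vary a lot.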

CPUs, on the other hand, remain crucial for tasks where precision and reliability outweigh raw speed. For instance, a VFX pipeline running complex physics simulations (like water, fire, or cloth) may lean heavily on CPUs, which typically have access to far more system RAM than a GPU has VRAM and deliver consistent, stable results. Similarly, when a scene exceeds the GPU's memory, the CPU can serve as the fallback.

In short: use GPUs for speed, previews, and interactive workflows; use CPUs for large-scale simulations, memory-heavy final renders, or pipelines where absolute accuracy and stability are non-negotiable. For a more in-depth look, see this article on CPU vs. GPU rendering.

Real-world example: According to NVIDIA and SC24, Pixar has increasingly leveraged NVIDIA’s GPU technology for feature films, as well as high-performance computing (HPC) and artificial intelligence (AI) for simulation and pipeline automation.

A GPU’s strength lies in its architecture. While a CPU typically has anywhere from a handful to a few dozen cores, a modern GPU can contain hundreds to thousands of cores designed for operations like matrix and vector calculations. Memory also plays a role, as VRAM allows GPUs to store high-resolution textures and geometry. And with the rise of artificial intelligence, NVIDIA CEO Jensen Huang noted at the Goldman Sachs Communacopia + Technology Conference:

“[...]We can’t do computer graphics anymore without artificial intelligence.[...]”

Getting started with GPU rendering doesn’t require deep technical expertise, but it does involve configuring your system so that rendering engines take full advantage of your graphics card. Whether you work in 3D modeling, video editing, or visualization software, the following principles help artists and developers achieve faster workflows, smoother previews, and production-quality results in less time, regardless of the specific software they use:

Check that your GPU supports the compute backend your rendering engine uses (e.g., CUDA, OptiX, HIP, or Metal). Also keep your GPU drivers up to date, since rendering engines are typically optimized for recent driver versions.
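Choosing a backend amounts to intersecting what the render engine supports with what your GPU and driver provide. The sketch below shows that logic with a hypothetical `pick_backend` helper and an assumed preference order (OptiX before CUDA on NVIDIA hardware, then HIP for AMD and Metal for Apple). In practice, engines such as Blender's Cycles expose this choice in their preferences rather than through code like this.

```python
# Assumed preference order, for illustration only: a ray-tracing-specific
# backend first, then general GPU compute backends. The CPU is the fallback.
PREFERENCE = ("OPTIX", "CUDA", "HIP", "METAL")


def pick_backend(supported_by_engine: set[str], available_on_system: set[str]) -> str:
    """Return the first backend in PREFERENCE that both the engine and the
    installed GPU/driver support, or "CPU" if there is no overlap."""
    usable = supported_by_engine & available_on_system
    for backend in PREFERENCE:
        if backend in usable:
            return backend
    return "CPU"


engine = {"OPTIX", "CUDA", "HIP", "METAL"}
print(pick_backend(engine, {"CUDA", "OPTIX"}))  # an NVIDIA RTX system
print(pick_backend(engine, {"HIP"}))            # an AMD system
```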

Rendering can consume significant VRAM, so optimize your scene by removing unnecessary geometry, reducing texture resolution, or using instancing. For heavy projects, consider GPUs with more memory or multi-GPU setups.
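A quick back-of-the-envelope estimate shows why texture resolution and instancing matter. The sketch assumes uncompressed textures, a full mip chain (which adds roughly one third), 32 bytes per vertex (position, normal, and UV as 32-bit floats), and one 4x4 float32 transform per instance. The helper names and the 500,000-vertex tree are illustrative, and real engines add compression, acceleration structures, and other overheads.

```python
MIB = 1024 ** 2


def texture_bytes(width: int, height: int, channels: int = 4,
                  bytes_per_channel: int = 1, mipmaps: bool = True) -> int:
    """Uncompressed size of one texture in bytes."""
    base = width * height * channels * bytes_per_channel
    # A full mip chain adds 1/4 + 1/16 + ... which is about 1/3 of the base level.
    return base * 4 // 3 if mipmaps else base


def mesh_bytes(vertices: int, copies: int, instanced: bool,
               bytes_per_vertex: int = 32, transform_bytes: int = 64) -> int:
    """Hypothetical model: duplicated copies store the whole mesh each time,
    while instances store the mesh once plus one 4x4 float32 matrix each."""
    if instanced:
        return vertices * bytes_per_vertex + copies * transform_bytes
    return copies * vertices * bytes_per_vertex


print(f"8K RGBA8 texture: {texture_bytes(8192, 8192) / MIB:.1f} MiB")
print(f"4K RGBA8 texture: {texture_bytes(4096, 4096) / MIB:.1f} MiB")
print(f"1000 duplicated trees: {mesh_bytes(500_000, 1000, False) / MIB:.1f} MiB")
print(f"1000 instanced trees:  {mesh_bytes(500_000, 1000, True) / MIB:.1f} MiB")
```

Halving texture resolution cuts its memory by a factor of four, and instancing stores the heavy mesh once rather than a thousand times.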

Use GPU-specific features like AI denoising, adaptive sampling, and hardware ray tracing acceleration for faster, cleaner results. Also balance preview and final render settings: draft renders can use fewer samples, while final outputs can use more samples and push the GPU harder for higher quality.
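Adaptive sampling stops spending samples on pixels that have already converged. The sketch below is a generic version of the idea, not any specific engine's implementation. Each pixel keeps drawing Monte Carlo samples until the estimated standard error of its mean falls below a tolerance. The `render_pixel` function and the `flat_pixel` and `noisy_pixel` callables are hypothetical; the latter two stand in for tracing paths through a real scene.

```python
import math
import random


def render_pixel(sample_fn, tolerance: float, min_samples: int = 16,
                 max_samples: int = 4096) -> tuple[float, int]:
    """Adaptive sampling for one pixel: draw samples until the standard error
    of the mean drops below `tolerance` or `max_samples` is reached."""
    n = 0
    mean = 0.0
    m2 = 0.0  # running sum of squared deviations (Welford's algorithm)
    while n < max_samples:
        x = sample_fn()
        n += 1
        delta = x - mean
        mean += delta / n
        m2 += delta * (x - mean)
        if n >= min_samples:
            std_error = math.sqrt(m2 / (n - 1) / n)
            if std_error < tolerance:
                break
    return mean, n


def flat_pixel() -> float:
    return 0.5  # e.g. an evenly lit wall: every sample agrees


rng = random.Random(42)


def noisy_pixel() -> float:
    return rng.random()  # e.g. a pixel under soft, complex lighting


for label, tol in (("draft", 0.02), ("final", 0.01)):
    _, n_flat = render_pixel(flat_pixel, tol)
    _, n_noisy = render_pixel(noisy_pixel, tol)
    print(f"{label}: flat pixel {n_flat} samples, noisy pixel {n_noisy} samples")
```

Because the standard error shrinks roughly as 1/sqrt(N), halving the tolerance from the draft setting to the final setting takes about four times as many samples on the noisy pixel, while the flat pixel stops at the minimum either way.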

Many studios use a combination of GPU and CPU rendering. The GPU handles fast previews and iterative changes, while the CPU may still be used for simulations, physics, or final-quality calculations. Some rendering engines allow switching seamlessly between GPU and CPU, giving flexibility depending on project needs.
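The hybrid split described above can be expressed as a simple routing rule. The `Job` type, the `choose_device` function, and the 90% VRAM headroom are hypothetical choices for illustration. In this sketch, simulations stay on the CPU, and render jobs go to the GPU only when their estimated memory footprint fits in VRAM.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    kind: str          # "preview", "final", or "simulation"
    scene_gib: float   # estimated memory footprint in GiB


def choose_device(job: Job, vram_gib: float, headroom: float = 0.9) -> str:
    """Hypothetical routing rule for a hybrid CPU/GPU pipeline."""
    if job.kind == "simulation":
        return "CPU"   # physics solvers stay on the CPU in this sketch
    if job.scene_gib <= vram_gib * headroom:
        return "GPU"   # fits in VRAM with some room to spare
    return "CPU"       # too large for VRAM: fall back to the CPU


jobs = [
    Job("lookdev preview", "preview", 6.0),
    Job("crowd shot final", "final", 40.0),
    Job("ocean sim", "simulation", 12.0),
]
for job in jobs:
    print(f"{job.name}: {choose_device(job, vram_gib=24.0)}")
```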

Rendering subjects GPUs to sustained, heavy workloads. Ensure your workstation has sufficient cooling and a reliable power supply to prevent throttling or crashes.

Modern GPUs support AI-powered tools such as NVIDIA’s DLSS and the OptiX AI denoiser, which can substantially cut render times with little visible loss of detail. These tools have been available for years and remain valuable in rendering. APIs like Vulkan, DirectX, and OpenCL ensure broader compatibility across rendering platforms.

In 2025, the NVIDIA RTX 5090 stands as the top choice for professionals, offering unmatched rendering speed, large VRAM, and advanced ray tracing. The RTX 4080 Super provides strong performance at a lower price, making it a solid value option, while the AMD RX 7900 XTX delivers competitive performance per dollar, though it trails in ray tracing and AI features. For Mac users, Apple’s M4 Max chip offers excellent efficiency and hardware-accelerated rendering within optimized software, though it cannot match the raw power of high-end desktop GPUs.

GPU rendering has reshaped 3D computer graphics and workflows across industries. By exploiting parallel computing and specialized architecture, GPUs have become the backbone of real-time visualization, gaming, film, and design. While CPUs still play an essential role, particularly in precision-heavy tasks, the future belongs to hybrid systems where both processors work together. If you are entering the world of 3D modeling, animation, or architectural visualization in 2025, mastering GPU rendering is no longer optional. It is the standard.