In the world of 3D computer graphics, few techniques have had as profound an impact on realism as Global Illumination (GI). From the rich bounce of ambient light across a character’s face to sunbeams slicing through volumetric fog in a dense forest, GI simulates how light behaves in the real world. In this article, we’ll explore how global illumination works, how it’s implemented, and why it’s crucial for achieving lifelike results in both offline and real-time computer graphics.

At its core, Global Illumination refers to the simulation of indirect light in a scene: light that bounces off surfaces rather than coming directly from a light source. Direct lighting covers only the light that travels in a straight line from a lamp or the sun to a surface. GI additionally calculates the diffuse interreflection, specular reflection, and color bleeding caused by surfaces reflecting and scattering that light onto one another.

Path tracing simulates light by sending rays from the camera into the scene, bouncing them off surfaces in randomly sampled directions, and averaging many such paths per pixel to estimate how much light arrives there. This technique excels at realism and is used in film production and high-end visual effects, but it requires significant computation, making it less suited for real-time graphics.
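To make the bounce loop concrete, here is a minimal sketch of a path tracer in Python. The scene is a deliberately trivial assumption, not a production setup: an infinite diffuse floor and an infinite emissive ceiling. The names `FLOOR`, `CEILING`, `trace_path`, and `render_pixel` are made up for illustration.

```python
import math
import random

# Hypothetical scene: an infinite diffuse floor at y = 0 and an infinite
# ceiling at y = 1 that is both diffuse and emissive. Values are illustrative.
FLOOR = {"y": 0.0, "albedo": 0.5, "emission": 0.0}
CEILING = {"y": 1.0, "albedo": 0.5, "emission": 1.0}


def intersect(origin, direction):
    """Return (hit_point, surface) for the plane the ray reaches, or None."""
    dy = direction[1]
    if dy == 0.0:
        return None  # ray runs parallel to both planes
    surface = CEILING if dy > 0.0 else FLOOR
    t = (surface["y"] - origin[1]) / dy
    if t <= 0.0:
        return None
    hit = (origin[0] + t * direction[0], surface["y"], origin[2] + t * direction[2])
    return hit, surface


def sample_cosine_hemisphere(up_sign, rng):
    """Cosine-weighted random direction around the normal (0, up_sign, 0)."""
    u1, u2 = rng.random(), rng.random()
    r = math.sqrt(u1)
    phi = 2.0 * math.pi * u2
    return (r * math.cos(phi), up_sign * math.sqrt(1.0 - u1), r * math.sin(phi))


def trace_path(origin, direction, rng, max_bounces=32):
    """Follow one camera path and return the radiance it carries back."""
    radiance = 0.0
    throughput = 1.0  # fraction of light that survives the bounces so far
    for _ in range(max_bounces):
        hit = intersect(origin, direction)
        if hit is None:
            break
        origin, surface = hit
        radiance += throughput * surface["emission"]
        # Diffuse BRDF (albedo / pi) * cosine / pdf (cosine / pi) = albedo.
        throughput *= surface["albedo"]
        up_sign = 1.0 if surface is FLOOR else -1.0
        direction = sample_cosine_hemisphere(up_sign, rng)
    return radiance


def render_pixel(samples=64, seed=0):
    """Average several paths for a camera looking straight down at the floor."""
    rng = random.Random(seed)
    camera_origin = (0.0, 0.5, 0.0)
    camera_direction = (0.0, -1.0, 0.0)
    total = sum(trace_path(camera_origin, camera_direction, rng) for _ in range(samples))
    return total / samples
```

In this toy scene every bounce lands on the opposite plane, so each path returns the same value and the usual Monte Carlo noise does not appear. The result approaches the analytic answer `FLOOR albedo * emission / (1 - FLOOR albedo * CEILING albedo)`. In a real scene, paths differ from one another, and that is why many samples per pixel are needed.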

Light mapping is a precomputed GI method where indirect lighting is baked into texture maps during development. This technique is widely used in real-time applications like games, as it offers excellent visual results with low performance overhead. Light maps are especially effective for static scenes, capturing indirect bounce light and subtle shadows without runtime cost.
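The bake-then-look-up idea can be sketched in a few lines of Python. This is an illustrative assumption of how such a pipeline might be shaped, not any engine's actual baker. `expensive_indirect_light` is a hypothetical stand-in for an offline GI solve, and the texel-center convention is one common choice among several.

```python
def expensive_indirect_light(u, v):
    """Hypothetical stand-in for a slow offline GI computation at surface point (u, v)."""
    return 0.2 + 0.6 * u


def bake_lightmap(width, height, lighting_fn):
    """Evaluate the lighting once per texel center and store it in a 2D grid."""
    return [
        [lighting_fn((x + 0.5) / width, (y + 0.5) / height) for x in range(width)]
        for y in range(height)
    ]


def sample_lightmap(lightmap, u, v):
    """Runtime lookup: bilinear interpolation between the four nearest texels."""
    height = len(lightmap)
    width = len(lightmap[0])
    x = min(max(u * width - 0.5, 0.0), width - 1.0)
    y = min(max(v * height - 0.5, 0.0), height - 1.0)
    x0, y0 = int(x), int(y)
    x1, y1 = min(x0 + 1, width - 1), min(y0 + 1, height - 1)
    fx, fy = x - x0, y - y0
    top = lightmap[y0][x0] * (1.0 - fx) + lightmap[y0][x1] * fx
    bottom = lightmap[y1][x0] * (1.0 - fx) + lightmap[y1][x1] * fx
    return top * (1.0 - fy) + bottom * fy
```

The expensive function runs only inside `bake_lightmap`, during development. At runtime, `sample_lightmap` does a handful of multiplications per lookup, which is why baked lighting is cheap but cannot react to objects that move.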

To bring GI into the real-time domain, engines like Unity’s High Definition Render Pipeline (HDRP) use probe-based lighting systems. This involves strategically placing light probes in the environment to capture indirect lighting at those points. At runtime, objects sample and interpolate lighting from nearby probes, allowing for convincing indirect illumination, including on moving objects, without the need for expensive ray tracing.
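As a rough illustration of probe interpolation, the Python sketch below blends probe colors by inverse-distance weighting. This is a simplified stand-in chosen for brevity and is not Unity's algorithm. Real engines typically store directional data per probe and use more structured interpolation. The function name `sample_probes` and the probe layout are assumptions for the example.

```python
def sample_probes(probes, position, power=2.0, eps=1e-12):
    """Blend probe colors at `position` using inverse-distance weights.

    `probes` is a list of (probe_position, rgb_color) pairs. This weighting
    scheme is an illustrative simplification, not any engine's actual method.
    """
    weights = []
    for probe_position, color in probes:
        dist_sq = sum((a - b) ** 2 for a, b in zip(probe_position, position))
        if dist_sq < eps:
            return color  # sitting exactly on a probe: use it directly
        weights.append(1.0 / dist_sq ** (power / 2.0))
    total = sum(weights)
    return tuple(
        sum(w * color[channel] for w, (_, color) in zip(weights, probes)) / total
        for channel in range(3)
    )


# Two hypothetical probes: one near a red wall, one near a blue wall.
probes = [
    ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0)),
    ((2.0, 0.0, 0.0), (0.0, 0.0, 1.0)),
]
midpoint_color = sample_probes(probes, (1.0, 0.0, 0.0))
```

An object halfway between the two probes receives an even mix of both colors, and the mix shifts smoothly as the object moves toward either probe.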

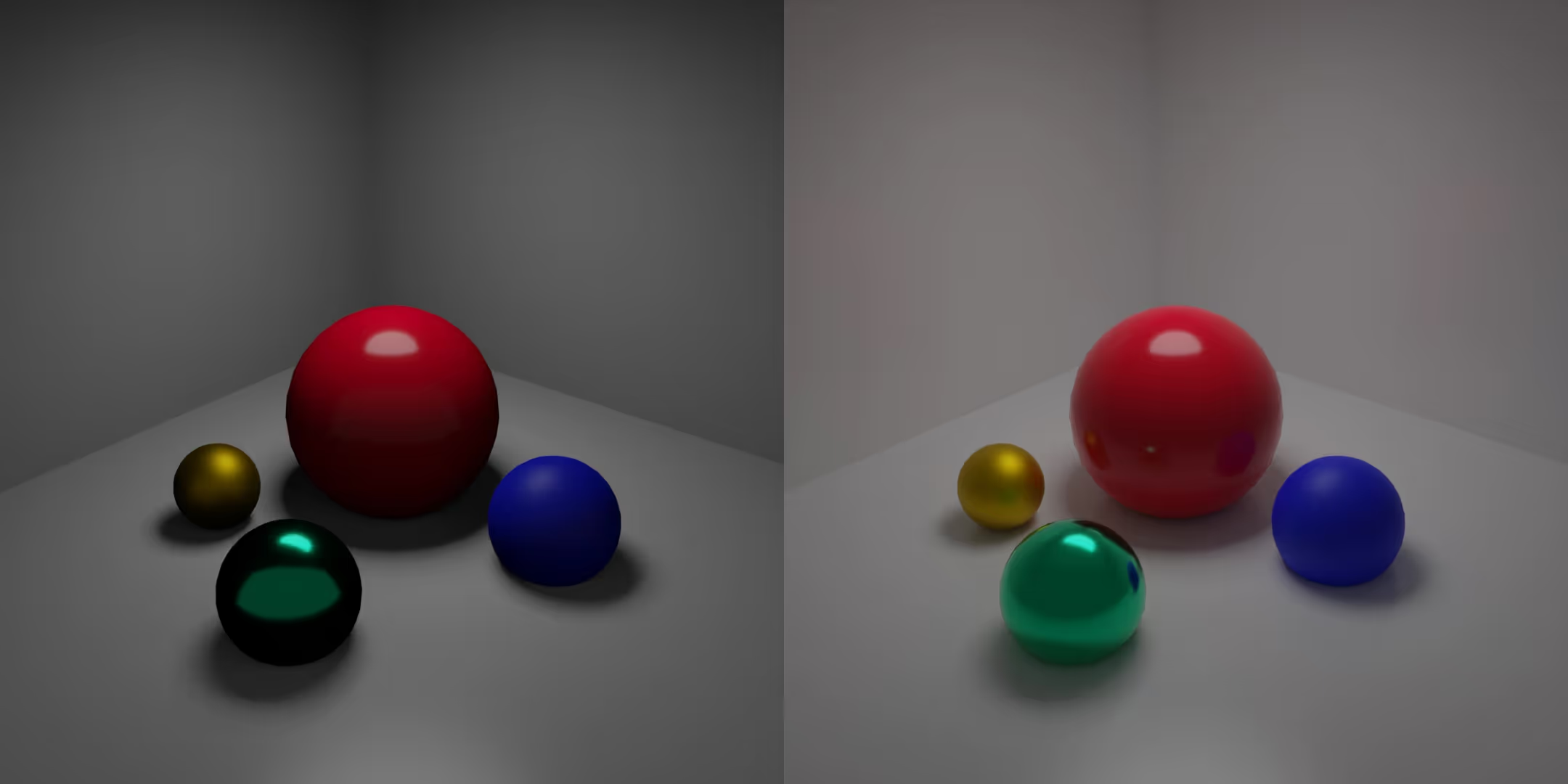

Ray tracing has revolutionized how we simulate light in digital environments by accurately modeling how rays travel, reflect, and refract through a scene. One of its defining strengths is multibounce lighting, the ability to capture how light reflects off multiple surfaces before reaching the viewer. This adds subtle interplay between colors, softens indirect shadows, and greatly enhances realism. In both offline and real-time rendering, multibounce GI helps create more natural lighting transitions and believable materials. Paired with modern sampling and denoising techniques, ray tracing brings us closer than ever to rendering that not only looks real but feels convincingly lit.
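The color interplay from multiple bounces can be illustrated with simple per-channel arithmetic. The Python sketch below assumes purely diffuse surfaces described only by an RGB albedo and ignores geometry entirely. The surface values and the function names `bounce` and `gather_bounces` are hypothetical.

```python
def bounce(light_rgb, albedo_rgb):
    """One diffuse bounce: each color channel is scaled by the surface albedo."""
    return tuple(l * a for l, a in zip(light_rgb, albedo_rgb))


def gather_bounces(light_rgb, surface_albedos):
    """Return the light that remains after each successive bounce."""
    remaining = []
    current = light_rgb
    for albedo in surface_albedos:
        current = bounce(current, albedo)
        remaining.append(current)
    return remaining


# White light hits a red wall, then a grey floor (illustrative values).
white = (1.0, 1.0, 1.0)
red_wall = (0.8, 0.1, 0.1)
grey_floor = (0.5, 0.5, 0.5)
after_wall, after_floor = gather_bounces(white, [red_wall, grey_floor])
```

After the first bounce the light is already tinted red. By the time it leaves the grey floor it is dimmer but still reddish, which is the color bleeding a single-bounce model would miss.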

Different light sources emit light differently in GI systems:

All of these can produce volumetric lighting, casting visible beams of light and god rays when their light interacts with fog, smoke, or transparent objects.

Image-Based Lighting (IBL) uses high-dynamic-range images (HDRIs) to simulate ambient light. This technique projects a 360° environment onto the scene, allowing objects to receive indirect light and reflections that match the background image, thus increasing visual coherence.
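A core step in IBL is turning a direction into a lookup in the 360° image. The Python sketch below assumes an equirectangular (latitude-longitude) layout with +Y up and the image center facing -Z. Axis conventions vary between tools, so treat this mapping as one possible choice. The tiny `env` grid stands in for a real HDRI and its values are invented.

```python
import math


def direction_to_equirect_uv(direction):
    """Map a 3D direction to (u, v) in [0, 1] on an equirectangular image.

    Assumed convention: +Y is up, and the image center faces -Z.
    """
    x, y, z = direction
    length = math.sqrt(x * x + y * y + z * z)
    x, y, z = x / length, y / length, z / length
    u = 0.5 + math.atan2(x, -z) / (2.0 * math.pi)
    v = math.acos(max(-1.0, min(1.0, y))) / math.pi
    return u, v


def sample_environment(env, direction):
    """Nearest-pixel lookup of the environment radiance along `direction`."""
    height = len(env)
    width = len(env[0])
    u, v = direction_to_equirect_uv(direction)
    px = min(int(u * width), width - 1)
    py = min(int(v * height), height - 1)
    return env[py][px]


# Hypothetical 4x2 "HDRI": a bright sky row above a dark ground row.
SKY = (2.0, 3.0, 5.0)
GROUND = (0.1, 0.1, 0.05)
env = [[SKY] * 4, [GROUND] * 4]
```

A surface facing up would look up `sample_environment(env, (0.0, 1.0, 0.0))` and receive the sky color, while one facing down would receive the ground color. A full IBL implementation would average many such lookups over the hemisphere rather than take a single sample.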

In offline rendering and baked lighting pipelines, IBL can be combined with light maps (precomputed textures that store lighting information per surface). Because the lighting is computed ahead of time, this greatly reduces runtime cost in static scenes and is common in video games.

Global Illumination remains at the heart of how we bring digital scenes to life. As rendering technologies continue to advance, our ability to simulate how light interacts with surfaces becomes more nuanced, immersive, and artist-friendly. Whether you're pushing visual fidelity or refining performance, mastering the principles of GI is key to creating believable and compelling environments. The future is bright, and it’s globally illuminated.